Wordle Solver

Overview

I created a jupyter notebook program in python that solves Wordle puzzles.

For anyone unfamiliar with Wordle, it is a popular puzzle game that gives you 6 chances to guess a 5-letter word. After each guess you make, the game offers feedback in the form of green squares for each of your letters that is in the correct position and yellow squares for each of your letters that belongs to the word but is not in the correct position.

You enter each of your guesses and results into my program as you play each turn of the game, and my program will tell you 1) the list of possible words that could be the answer, and 2) what it recommends for your next guess.

To explain my program properly, I'll start with a glossary of terms I use.

Glossary

word: a 5-character string of letters from the alphabet.

Words are customarily represented in all upper case.

guess: a word that may be entered in a Wordle game.

book: a set of possible guesses.

Wordle does not let you enter just any 5-letter sequence you want, such as RSTLN, because RSTLN is not in Wordle's book. I'm not certain whether the entirety of Wordle's book is generally known or available. My program allows you to choose what book you wish to use.

answer: the correct answer to a Wordle game.candidate: a potential answer to a Wordle game.

field: a set of candidates.

The "initial" field refers to the field of candidates for a given puzzle before any guesses are made. Note that the initial field is much like a book, but it does not necessarily have to be the same as the book for a puzzle. For example, there are words such as SLAVE that are known to be possible guesses but not possible answers. How do I know this? The New York Times, which hosts Wordle, has an analysis feature you can use after you have completed a game. This feature shows you how good your guesses were and includes information on how many candidates exist (a field) after each guess. In comparing notes with other Wordle players, I've learned that some guesses such as SLAVE do not reduce the number of candidates. Therefore such words do not belong to Wordle's initial field. But since you can guess them, they do belong to Wordle's book.

score: a 5-character, color-coded response to a guess by the Wordle game.

For example, YY__G is a score. Wordle players would recognize this as two yellow squares, followed by two neutral squares and one green square. There are 3*3*3*3*3 = 243 unique scores.

scorebook: a 3-level dictionary containing guesses --> scores --> fields.

At the highest level, a scorebook is a list of guesses taken from a given book. At the second level, each guess has the list of all 243 possible scores. At the third level, each guess --> score has a specific field that corresponds to the guess and score it belongs to. For example, under the guess SLATE and the score G_GGG, the field might consist of the words SKATE, SPATE, and STATE. Depending on circumstances, the field for SLATE --> G_GGG may not even contain all three of these candidates (if for example a "P" had been eliminated in a previous guess). Many of these fields are empty, but some can be quite long.

gamestate: a book, a field, and the corresponding scorebook.

Program feature #1 — generating fields of candidates

A scorebook can be seen as a branching tree of all possible outcomes of the current guess. A player selects a guess; that guess generates a score; the corresponding field becomes the new field of candidates for the next guess.

The gamestate is updated following each guess. The book of a gamestate stays the same; the new field is inherited from the previous scorebook as described above; the scorebook is updated so that each guess --> score --> field is populated only with a subset of words from the field as determined by the previous guess and score.

A consideration of strategy

Thus far I have described how the program performs its first feature, which is to generate a new field of candidates. There may be better ways to accomplish this, but any two programs that do it correctly should have identical outputs.

The second feature the program performs is to recommend the best next guess. This notion of "best" is necessarily subjective, though, depending on a player's risk tolerance. Consider the situation below.

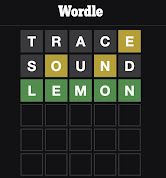

SOUND could not have been the correct answer, because it had already been determined by guess #1 (and score #1) that the answer contained an E. A guess like SWELL had a better chance of being correct on guess #2, but SOUND eliminates more candidates, likely increasing the chances of ultimately winning with fewer guesses.

A more common example would be if you know that the answer ends in something like -RAIN. You might try BRAIN, DRAIN, or GRAIN, but this could take between 1-3 more guesses. If, however, you sacrifice a guess on a non-candidate such as BUDGE, then you can learn which of the 3 candidates is correct and guarantee that you'll solve in exactly 2 more guesses.

Early on in the Wordle fad I identified this choice of strategies as the Tin Cup phenomenon. In that movie, Kevin Costner's character attempts to drive a golf ball onto the green in one stroke instead of two but repeatedly ends up in the water hazard.

This represents the only possible difference in strategies that I can think of: strategy #1 — only guess candidates, so that every guess stands a chance of solving the puzzle, or strategy #2 — sometimes guess non-candidates in order to lower the expected value of the number of guesses required.

The game actually offers a "hard mode" option that forces you to adopt strategy #1. I vehemently object to calling this "hard" mode. It is "hard" in that it reduces your options and typically increases the number of guesses required to solve. But calling it "hard mode" makes it seem as though it would require more strategic thinking, when in fact it requires less. "Hard mode" should mean "difficult" in the sense that more sophisticated thought is somehow rewarded, which is not the case here.

My program adopts strategy #2, which is to lower the expected value of the number of guesses required, although it can be easily adapted to strategy #1 by updating the book in each turn to be equal to the field.

Program feature #2 — determine the best next guess

I'm not 100% certain that my method accomplishes this feature correctly. It's pretty good though.

The determination of the best next guess begins as follows. Starting from a given gamestate, consider any of the guesses in its book. Depending on which score that guess receives, it may generate a short or long field of candidates. (If it generates an empty field, then there has been an error: this would mean the actual answer did not belong to the initial field, which it always should.) How should we evaluate a guess? Short fields are surely good. Do we want the guess with the shortest average field, or the shortest maximum field (or something else)? I'm not entirely sure. The shortest maximum would be easier to find. My program calculates the shortest average, but some mathematical modification to this "average" is warranted.

To calculate this average, it wouldn't make sense to divide the sum of all field lengths by 243, because we know that a guess will only receive a score corresponding to one of its non-empty fields. (If a guess has one field of 50 candidates and 242 empty fields, then this guess is certainly worse than a guess that has 243 fields of exactly one candidate each.) So we should limit our denominator to non-empty fields. But this actually isn't the right denominator either.

Consider the (partial) scorebook depicted above: one guess, AGILE, has two non-empty fields of lengths 9 and 1, and a second guess, AWARE, has two non-empty fields of lengths 6 and 6. Their average field lengths are 5 and 6, but is the one with the lower average more desirable? These averages are misleading, because in each case it's not the fields that are equally likely; the candidates are equally likely. What we need then is a weighted average. In the case of the first guess, the probability that the correct answer will generate the score whose field length is 9 is 90% (9/10), so its weighted average is not 5, but actually much closer to 9.

9 * 90% + 1 * 10% = 8.2

There is another adjustment we need to make. How bad is a long field? A field of 2 candidates is significantly worse than a field of 1 candidate, but a field of 11 candidates is not as much worse than a field of 10 candidates. This feels intuitive. But why is it?

It's because the larger a field is, the more candidates you can (probably) eliminate in one guess. This means that we should measure fields using logarithms.

The base of the logarithm doesn't actually matter, as long as a consistent base is used. My program uses base 2. The important thing is that a field length of 225 is seen as "twice as bad" as a field length of 15 rather than "15 times as bad". Here's the math: If there are 225 remaining candidates and guesses typically reduce fields to 1/5 their size, then how many guesses should this take? The answer would be the logarithm of 225 in base 5, about 3.37. In the case of 15 remaining candidates, it would take about 1.68 guesses. If we do the same calculations assuming 1/3 instead of 1/5 (so logarithms in base 3 instead of base 5) then the corresponding numbers would be 4.93 and 2.96. In either case, a field of 225 candidates is expected to take twice as many guesses as a field of 15 candidates.

So here it all is in one huge mouthful: my program returns the guess with the lowest expected value of the number of further required guesses by calculating the weighted average of the logarithms of its non-empty field lengths.

Variations of play

By the time I created this game, I had written and collected many, many notes consisting of fields of candidates I had brainstormed for various situations, what I would now call scorebook entries.

For a long time, every time I played, I would use STERN for my first guess and receive a corresponding score. If I had never received this score before, I would proceed to brainstorm as many candidates as I could (creating a field). But if I had received this score and already made notes for it, I only had to look at those existing notes and form my next guess based on that field.

In this way I generated well over 1,000 candidates corresponding to several dozens of scores. One way to use my program is to limit my book and field to only the words I generated in my notes. This often results in the program returning an empty field and no best recommended guess, because the answer is a word I had never written down in my notes.

This is an example of using custom sets of words for the book and/or initial field.

Further exploration

Perhaps what I should do next is add the Wordle feature itself so that I can generate random words to solve. Then I could generate entire new games using custom sets of words. I could import the Oxford English Dictionary and play Wordle games with that expanded set of candidates.

Comments

Post a Comment